We’re here for the Era of Research.

Transformer Lab is a Machine Learning Research Platform designed for frontier AI/ML workflows. Local, on-prem, or in the cloud. Open source.

More Research, Less Friction

Research workflows are often slowed down by brittle bash scripts, scattered S3 buckets, and manual SLURM templates. Transformer Lab replaces this fragmentation with a unified structure. By standardizing environment management, compute coordination, and experiment tracking, the platform helps keep every project reproducible and easy to maintain at any scale.

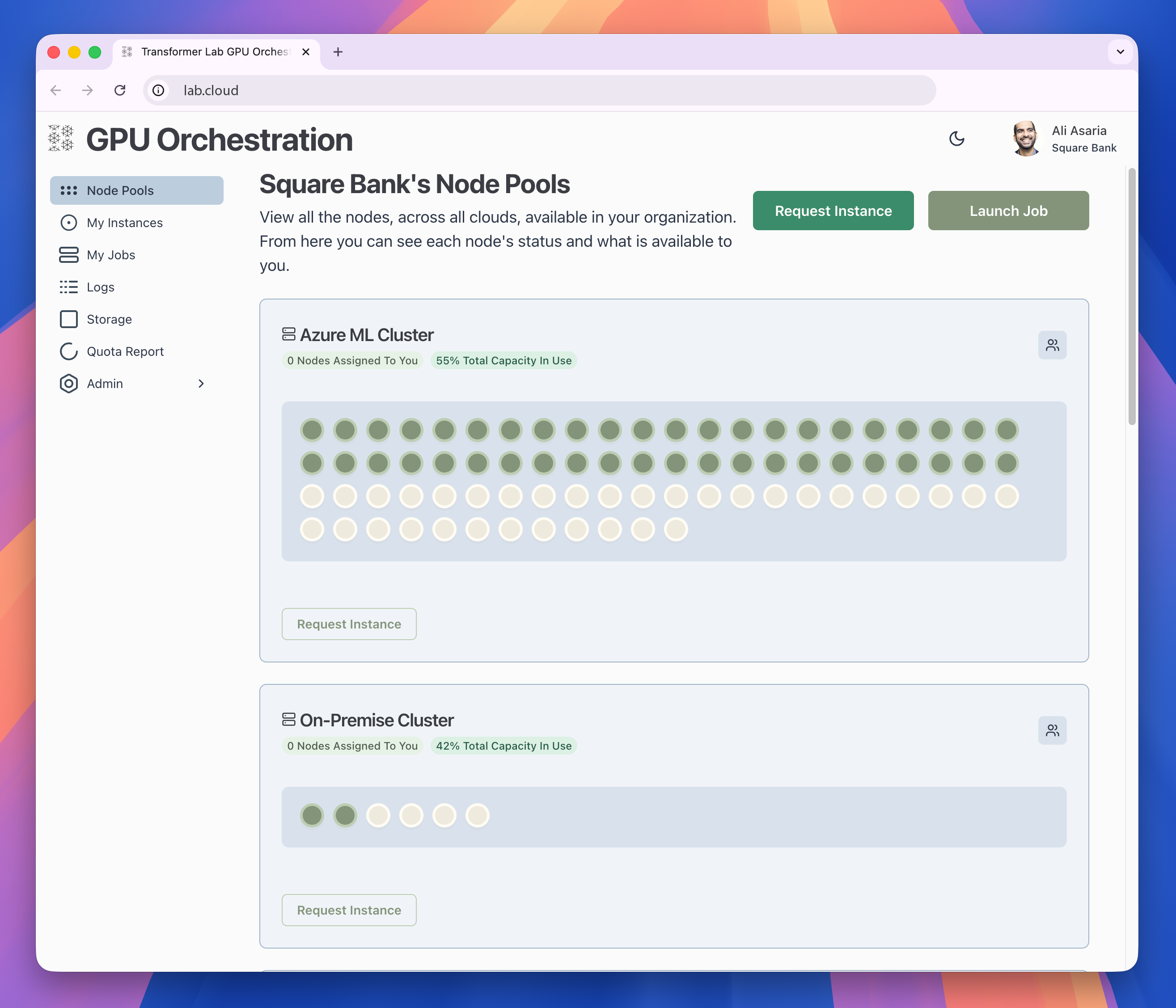

Orchestration for Distributed Training

Run jobs locally, on-prem, or in the cloud without rewriting scripts or managing SLURM templates. Transformer Lab is designed to handle environments, dependencies, and distributed initialization so you can scale models without setup overhead. Scheduling and telemetry keep GPU usage visible.

Advanced Training & Multimodal Workflows

A unified environment for pre-training, fine-tuning, and evaluating LLM, diffusion, and audio models. Includes production-ready implementations of advanced preference optimization techniques (DPO, ORPO, SimPO, GRPO). Supports complex multimodal workflows, from ControlNets and image inpainting to fine-tuning TTS models on custom datasets.

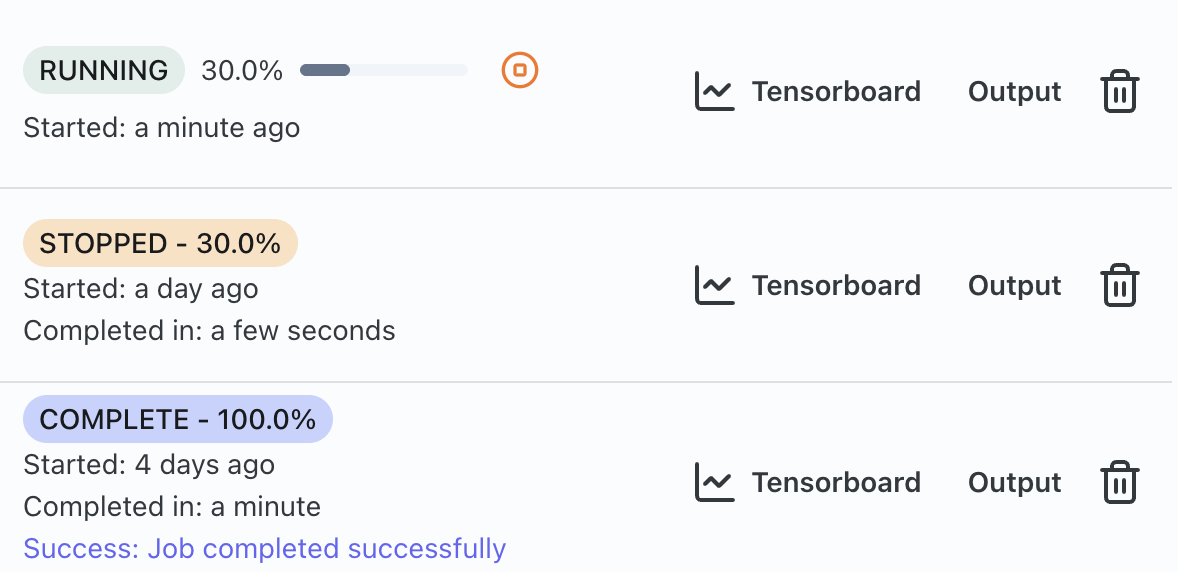

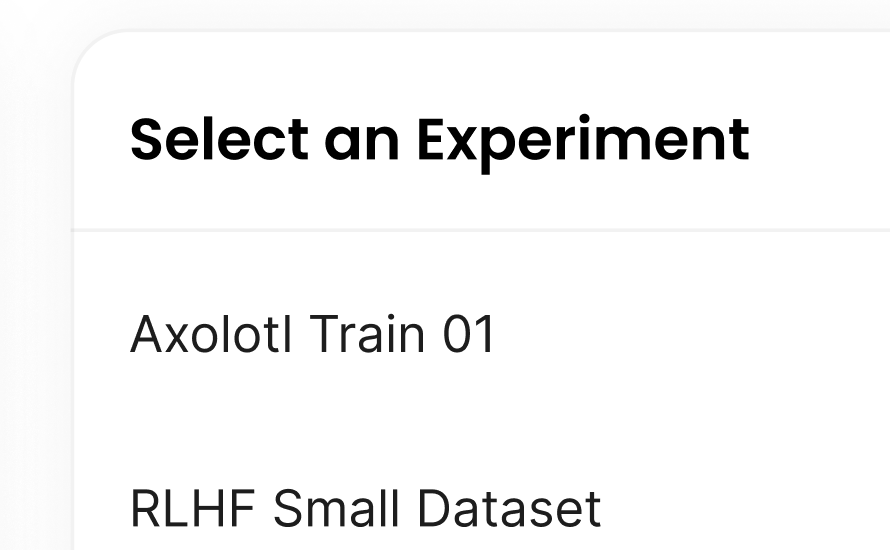

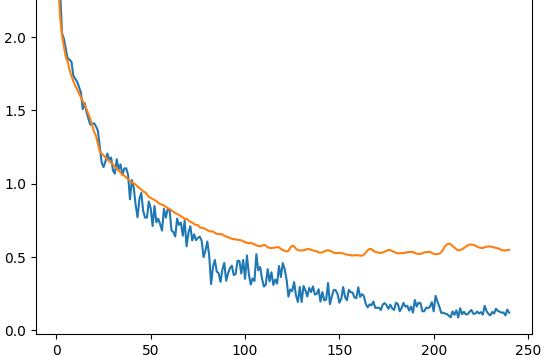

Experiment Tracking

Automatically record hyperparameters, code versions, metrics, and logs for every run, creating a complete and consistent history. Because jobs are launched and monitored in the same workspace where their records live, you can revisit, compare, and resume experiments without hunting for them.

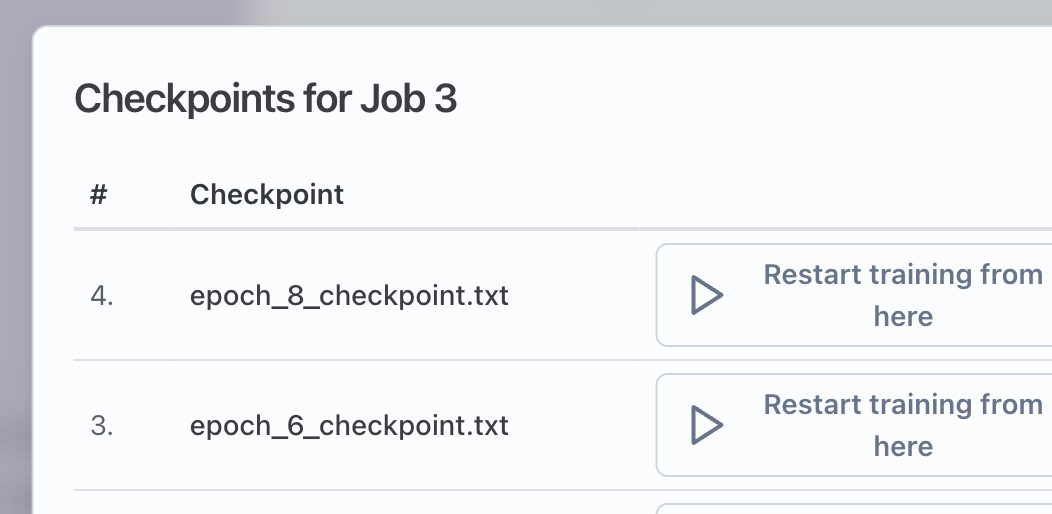

Artifact, Dataset, and Checkpoint Management

Systematically manage your artifacts, data, models, and checkpoints, tracking exactly which code, dataset version, and config produced each checkpoint. Resume training after a failure without wasting compute. All data is synchronized across nodes and between training runs, even on ephemeral compute, so your assets stay accessible.

Pre-training, Fine-tuning, RLHF, and Preference Optimization

You can train any model on Transformer Lab, and we also provide pre-written scripts for training advanced models from scratch or fine-tuning existing ones, with production-ready implementations of DPO, ORPO, SimPO, and GRPO that work out of the box. A complete RLHF pipeline with reward modeling handles the complex orchestration automatically, from data processing to final model outputs.

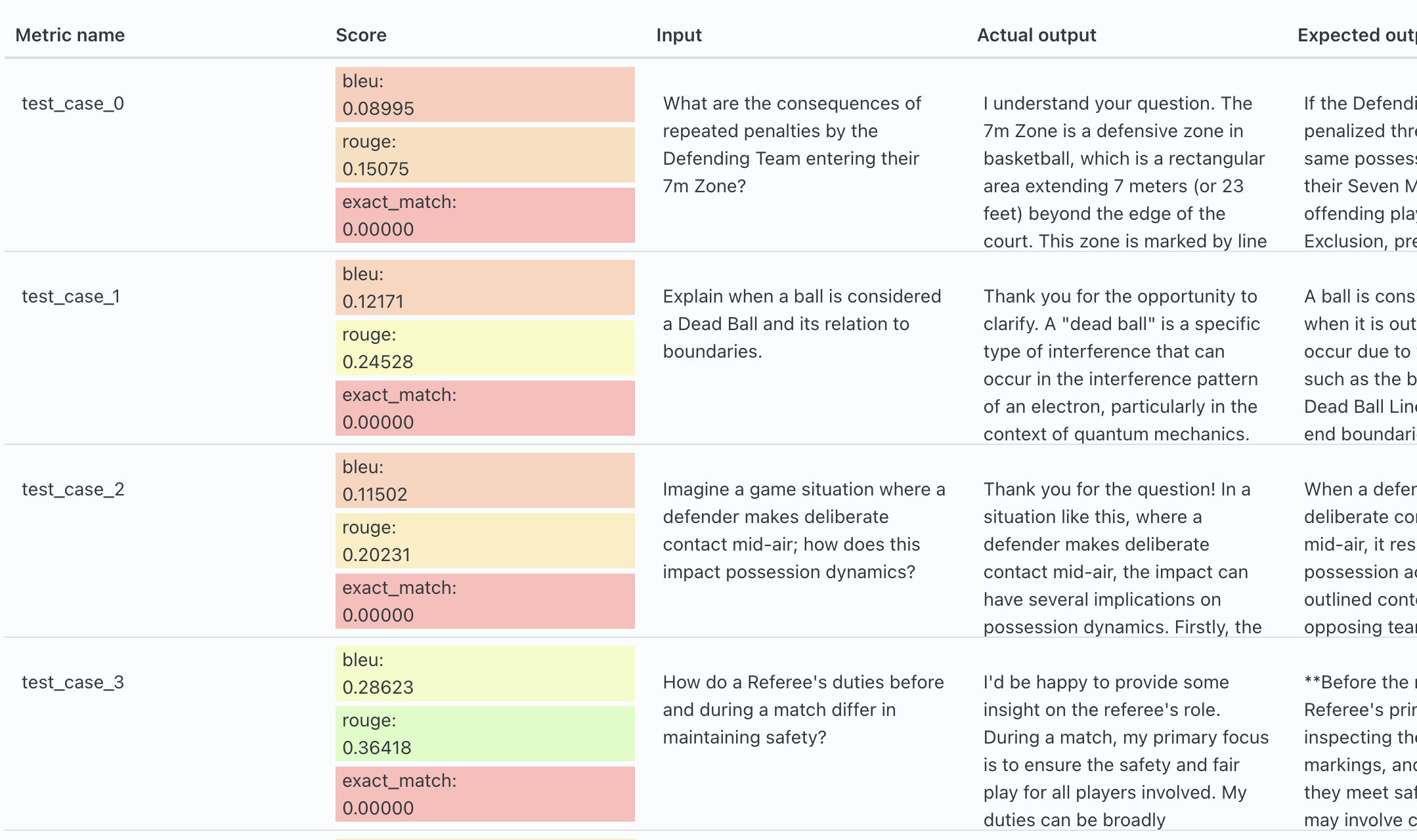

Comprehensive Evals

Measure what matters with built-in evaluation tools. Run EleutherAI LM Evaluation Harness benchmarks, LLM-as-a-Judge comparisons, and objective metrics in one place. Red-team your models, visualize results over time, and compare runs across experiments with clean, exportable dashboards.

Works with the Tools You Love

Transformer Lab integrates directly with Weights & Biases, GitHub, SkyPilot, SLURM, and Kubernetes. It provides a flexible, framework-agnostic environment to run Ray, Hugging Face TRL, Unsloth, PyTorch, or MLX tasks across a range of hardware, including NVIDIA and AMD GPUs, TPUs, and Apple Silicon.

Trusted by Innovative Teams

“Transformer Lab has made it easy for me to experiment and use LLMs in a completely private fashion.”

“The essential open-source stack for serious ML teams.”

“SLURM is outdated, and Transformer Lab is the future.”